CSCW best paper: "Peer Recommendation Interventions for Health-related Social Support"

This post is an adaptation of my CSCW 2025 presentation, which is available on YouTube. A shorter summary was previously published here. This post was mirrored on the GroupLens blog.

Can recommendation systems help people find support online?

Back in 2021, I did a study looking at the feasibility of peer recommendation interventions for health-related social support. The published paper just won a Best Paper award at CSCW 2025, so I want to tell you about the system we built and why we think researchers should build recommendation systems to help people find online support.

Social support is a key determinant of mental and physical health. We also know that support is particularly important during health journeys. But much of the social support that would benefit people on a health journey is unavailable in their existing support networks. In particular, people benefit from support from others with similar, but potentially uncommon, experiences.

Unfortunately, actually finding and connecting with these peers is logistically hard. That difficulty is one of the reasons hospitals organize condition-specific support groups. Fortunately, the internet exists!

Finding support during health journeys is not easy. CC BY-SA 4.0

Online communities organized around health (creatively called online health communities) offer the promise of a space where people can seek and receive support. But even in online health communities, we have a discovery problem.

Imagine a highly-motivated support seeker:

- I have some kind of support need in mind.

- I’m interested in connecting with peers.

- …now I need to wade through thousands of forum threads.

Not great.

There are a lot of design opportunities here, but a suggestion that comes up again and again is recommendation.

If you read a Computer-Supported Cooperative Work (CSCW) paper about an online health community, one of its design implications will probably involve improving peer recommendation systems. (I wrote a paper like that too.) But empirical research on systems designed to connect peers in online health communities is remarkably rare.

My challenge to the community is to treat recommendation as a serious intervention into people’s social networks. That means we need to design the intervention with particular health benefits in mind.

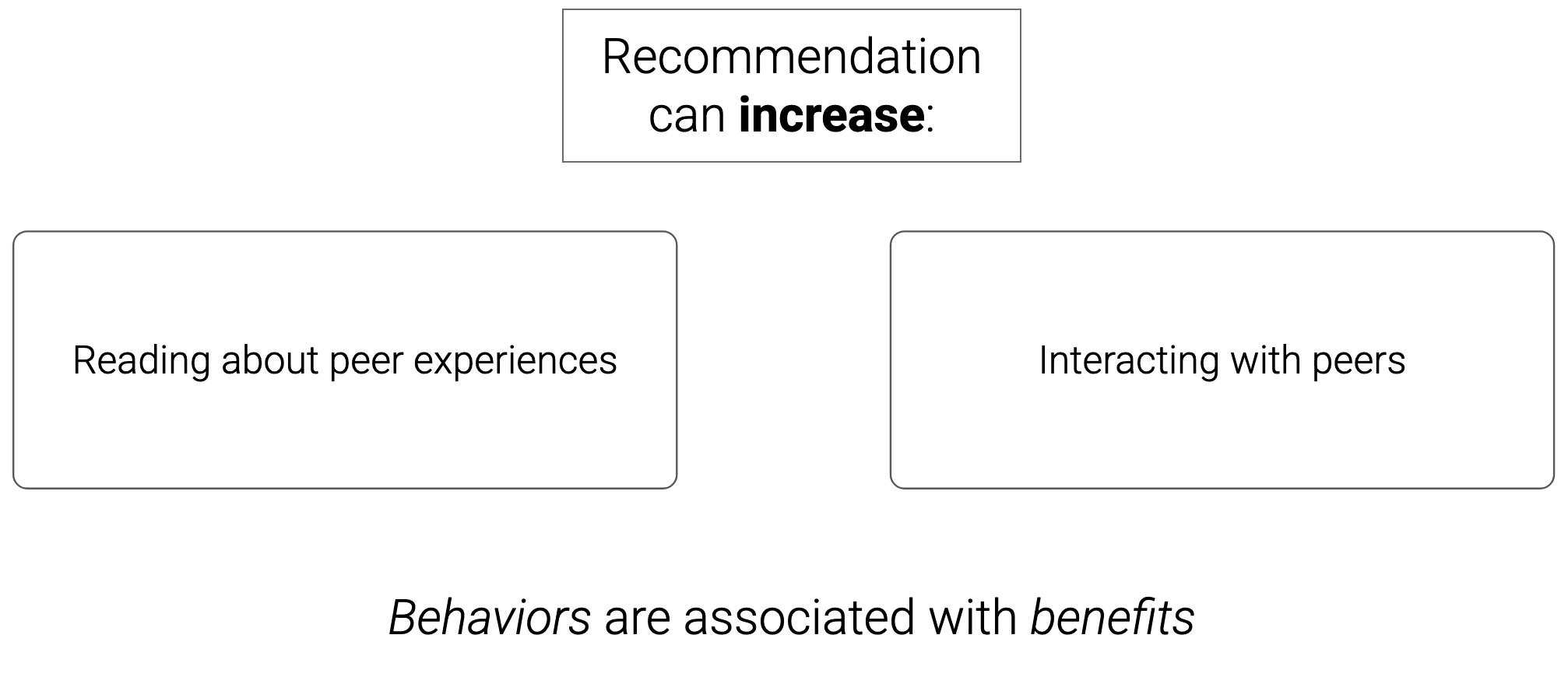

In this case, we’re designing our intervention to increase two behaviors that are plausibly associated with health benefits:

- Reading about the experiences of peers

- Interacting with peers

In general, we don’t know that recommendation will actually increase these behaviors, nor do we know that increasing these behaviors actually leads to health benefits. This is a classic “causal gap”: we have some associational evidence that intervening on these behaviors could help, but no strong causal evidence.

Two behaviors that we hope are associated with health benefits. CC BY-SA 4.0

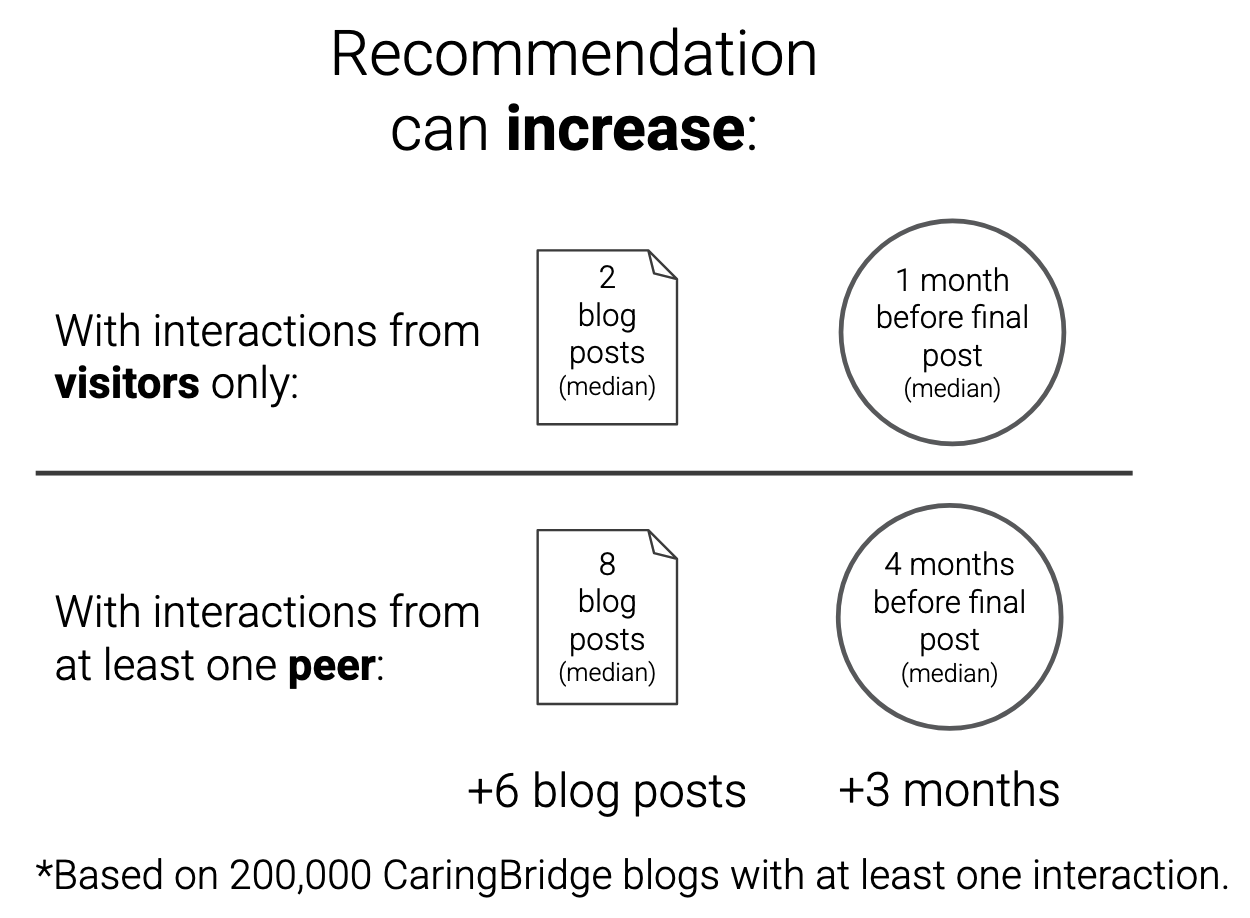

The associational evidence is quite good. For example, we ran our study on CaringBridge.org, a health blogging platform. On CaringBridge, receiving interactions from peer blog authors was associated with an additional 6 blog posts and an additional 3 months of activity on the site.

Associational evidence shows a relationship between peer interactions and online behaviors. CC BY-SA 4.0

That’s a huge effect size… if there’s a strong causal relationship between peer interaction and engagement.
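To see why the size of an associational effect says little on its own, here is a toy simulation in Python. Everything in it is hypothetical and not drawn from CaringBridge data: a hidden trait (call it "highly engaged") makes authors both more likely to receive peer interactions and more likely to post, while peer interactions have no causal effect on posting at all. The probabilities, post counts, and function names are made-up assumptions chosen only to make the pattern visible.

```python
import random
from statistics import mean

# Each record: (highly_engaged, received_peer_interaction, num_posts)
Author = tuple[bool, bool, float]


def simulate_authors(n: int, seed: int = 0) -> list[Author]:
    """Toy population where a hidden trait drives BOTH receiving peer
    interactions and posting; peer interactions have no causal effect."""
    rng = random.Random(seed)
    authors = []
    for _ in range(n):
        engaged = rng.random() < 0.5
        # Engaged authors are more visible, so peers interact with them more often.
        received = rng.random() < (0.7 if engaged else 0.2)
        # Post count depends only on `engaged`; `received` is never used here.
        posts = rng.gauss(12.0 if engaged else 4.0, 2.0)
        authors.append((engaged, received, posts))
    return authors


def naive_difference(authors: list[Author]) -> float:
    """Mean posts of authors who received peer interactions minus those who didn't."""
    with_peers = [posts for _, received, posts in authors if received]
    without_peers = [posts for _, received, posts in authors if not received]
    return mean(with_peers) - mean(without_peers)


def stratified_difference(authors: list[Author]) -> float:
    """Same comparison made separately within each level of the hidden trait,
    then averaged with equal weights (the strata are roughly equal in size here)."""
    per_stratum = [
        naive_difference([a for a in authors if a[0] == level])
        for level in (True, False)
    ]
    return mean(per_stratum)


authors = simulate_authors(10_000, seed=1)
print(f"naive difference:          {naive_difference(authors):.2f} posts")
print(f"within-stratum difference: {stratified_difference(authors):.2f} posts")
```

With these made-up parameters, the naive comparison shows a gap of roughly four posts, while the within-stratum gap is close to zero. In real data such a confounder may be unmeasured, so stratifying on it is often impossible; randomizing who receives the intervention is what breaks the link, which is the motivation for an RCT.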

What we want is to evaluate efficacy. Will the recommendation intervention actually increase desired behaviors? The conventional next step is to run a randomized controlled trial (RCT). But running an RCT is hard and expensive! At this stage there are too many open questions about the intervention, and too many researcher degrees of freedom, for an RCT to produce high-quality evidence. Instead, let’s run a feasibility study first.

This concept comes mostly from the health sciences (see Bowen et al. 2009) and is greatly underused in human–computer interaction research. We will try to collect some data about efficacy, but our focus is on all the preliminaries:

- The demand for the intervention

- The acceptability of the intervention to participants

- How practical and adaptable the intervention is

- Specific challenges in implementation

Our goal is to triangulate overall feasibility by collecting evidence in each of these areas.

System design

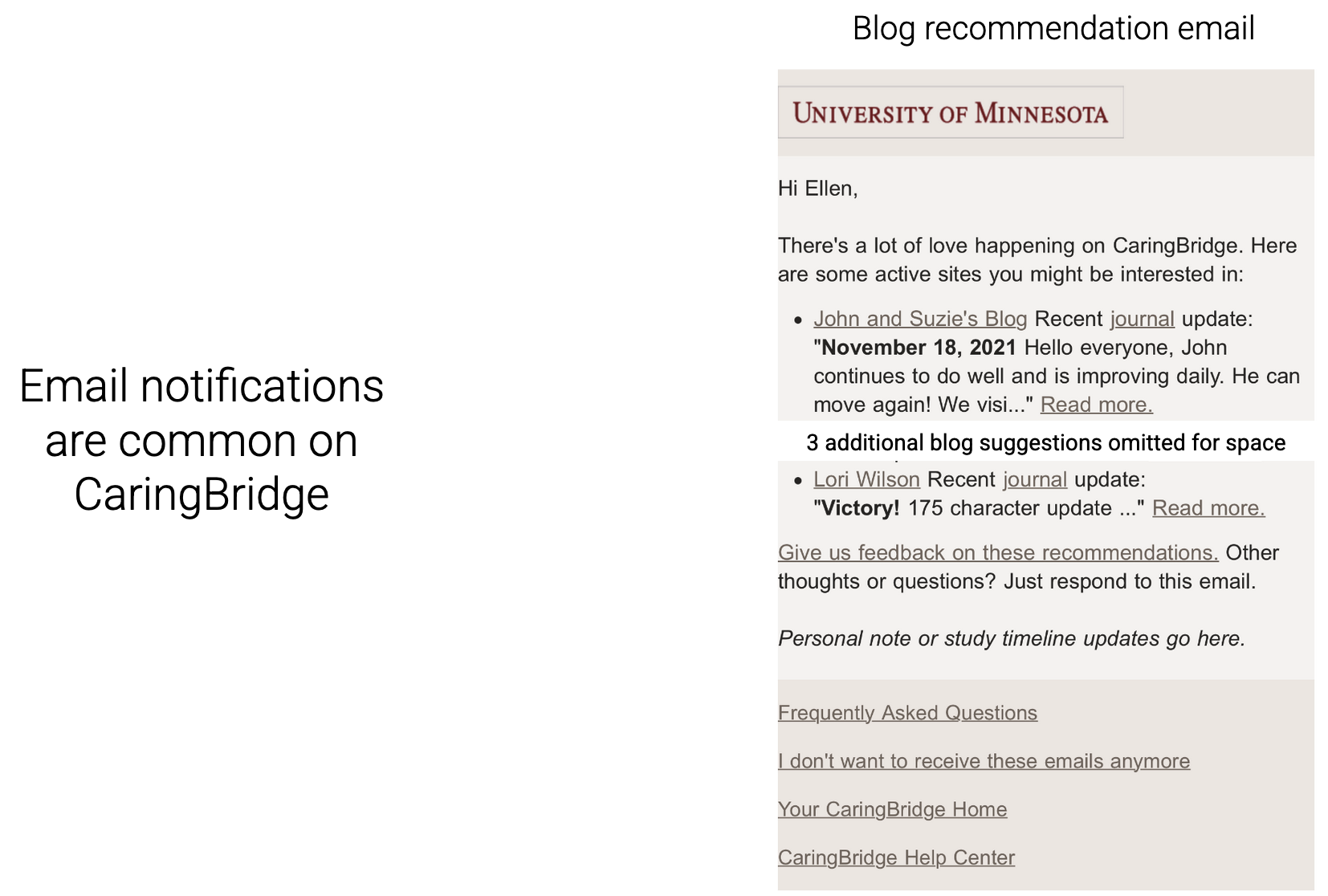

In keeping with the feasibility study mindset, we wanted to be practical. Email notifications are common on CaringBridge, so we copied the design of existing author notifications into a new “blog recommendation email” with five links.

To generate recommendations, we trained a deep learning recommendation model on historical interaction data we already had. Because the goal of a feasibility study is to surface useful insights, we also ran a bunch of experiments on what data are useful to have if you’re trying to do this… see the paper appendices for details.
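The post doesn't describe the model's internals (the paper does), so the following is only a hypothetical sketch of the last step of a pipeline like this one, not the model from the paper. It assumes some trained model has already produced a vector for each author and each candidate blog. Each blog is scored by dot product with the author's vector, blogs the author shouldn't be shown (such as their own) are excluded, and the top five are kept for the email. The names `recommend_blogs` and `blog_vecs` and the toy vectors are illustrative assumptions.

```python
import heapq


def dot(u: list[float], v: list[float]) -> float:
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def recommend_blogs(
    author_vec: list[float],
    blog_vecs: dict[str, list[float]],
    exclude: set[str],
    k: int = 5,
) -> list[str]:
    """Hypothetical helper: return the IDs of the k highest-scoring blogs,
    skipping any blog in `exclude` (e.g. the author's own blog)."""
    scored = (
        (dot(author_vec, vec), blog_id)
        for blog_id, vec in blog_vecs.items()
        if blog_id not in exclude
    )
    # nlargest orders by score; ties fall back to comparing blog IDs.
    return [blog_id for _, blog_id in heapq.nlargest(k, scored)]


# Toy embeddings standing in for a trained model's output (made-up values).
blog_vecs = {
    "own_blog": [1.0, 1.0],
    "blog_a": [0.9, 0.1],
    "blog_b": [0.8, 0.3],
    "blog_c": [0.1, 0.9],
    "blog_d": [0.5, 0.5],
    "blog_e": [0.7, 0.0],
    "blog_f": [0.2, 0.2],
    "blog_g": [0.0, 1.0],
}
author_vec = [1.0, 0.0]

# Five links for one author's recommendation email.
print(recommend_blogs(author_vec, blog_vecs, exclude={"own_blog", "blog_e"}))
# → ['blog_a', 'blog_b', 'blog_d', 'blog_f', 'blog_c']
```

Here `blog_e` stands in for a blog the author already follows. Excluding already-known blogs is one reasonable choice for a discovery-oriented email, but it is an assumption of this sketch rather than a documented design decision.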

Field study

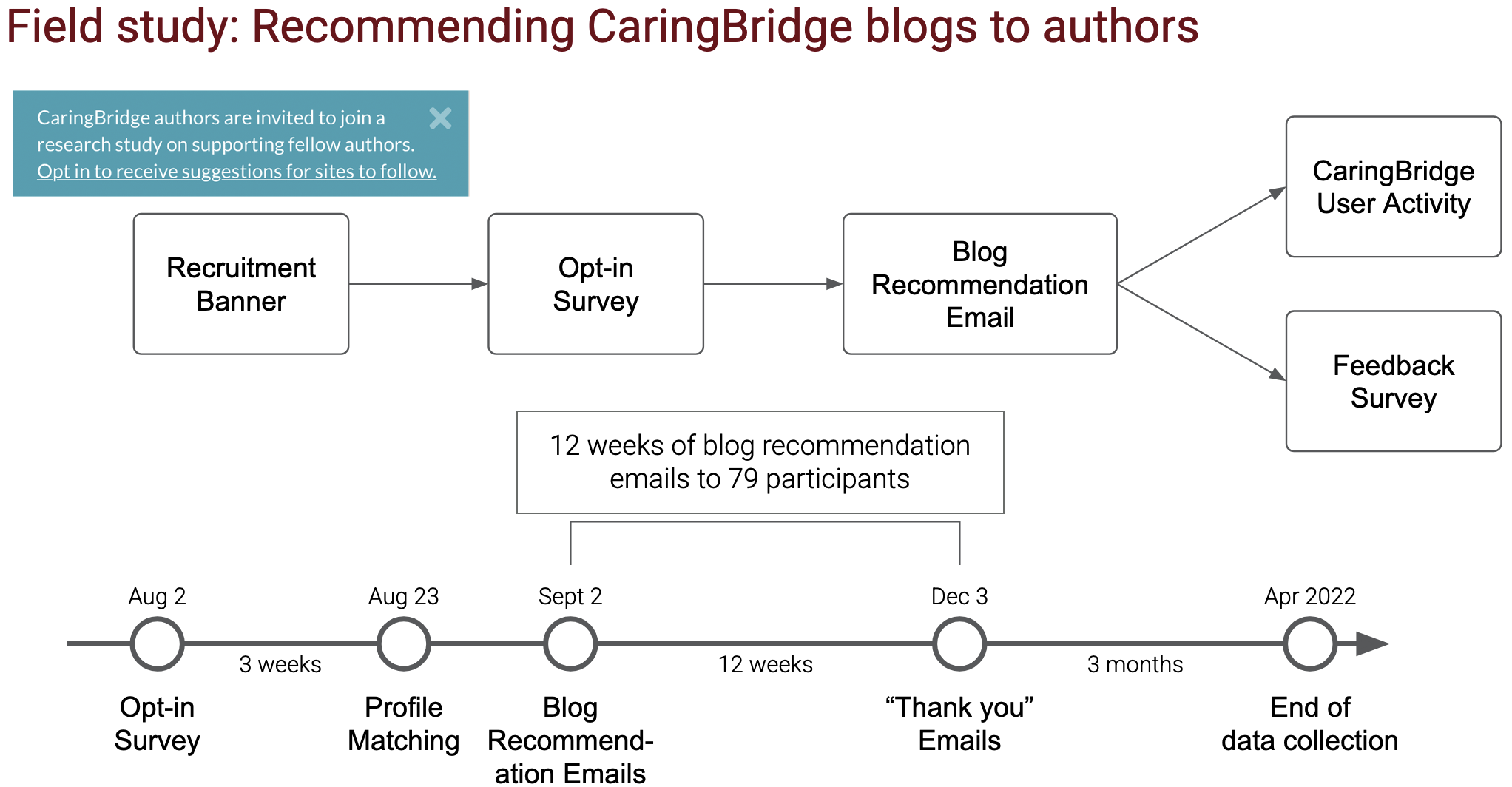

Field study flowchart and timeline. CC BY-SA 4.0

We threw up a recruitment banner and got CaringBridge authors to take a survey. We got 79 people to sign up for 12 weeks of emails. Then we collected data for 3 months after the emails ended so we could estimate efficacy over a slightly longer term.

Sidebar: Recruitment survey

We got a bunch of interesting data in the recruitment survey. The table below shows the percentage of the ~100 respondents who checked various motivations for connecting with peers and various characteristics that make a peer connection valuable.

| Motivations for peer connection | % checked | Desired peer characteristics | % checked |
|---|---|---|---|
| Learn from others | 80% | Similar diagnosis or symptoms | 84% |
| Communicate with peers | 46% | Similar treatment | 54% |
| Receive experienced support | 44% | High-quality writing | 51% |
| Mentor newer authors | 29% | Same caregiver relationship | 32% |
| Not interested, but maybe in the future | 6% | Lives near me | 25% |
| Never interested | 2% | Similar cultural background | 14% |
| Not interested, but maybe in the past | 0% | | |
| Something else | 9% | Something else | 8% |

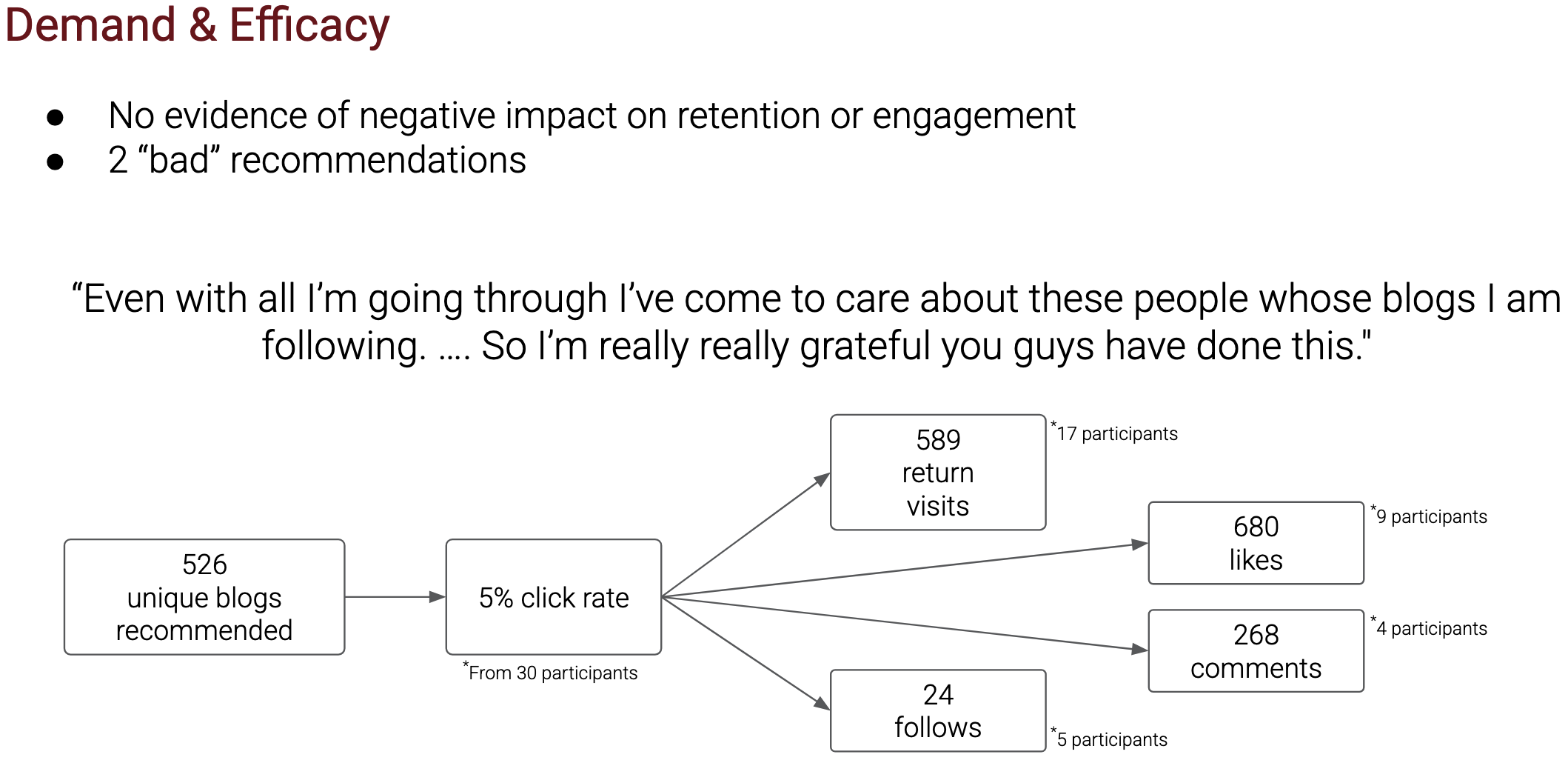

Here are our results, condensed to one image:

Summary of results. CC BY-SA 4.0

We saw no apparent harms, either qualitatively or in terms of retention and engagement. One participant objected to 2 recommendations; otherwise, people mostly didn’t give us feedback on the recommendations. We also heard some really nice feedback from participants.

Recommendations didn’t seem to hurt, and they caused hundreds of new likes and comments. Now, we have the confidence to do a full-blown RCT.

During this study, we learned a bunch of fiddly, practical things that I hope will be really helpful for other people designing peer recommendation systems. If you’re interested in peer recommendation or peer matching at all, check out the paper for more details.

Here’s the full paper citation:

Zachary Levonian, Matthew Zent, Ngan Nguyen, Matthew McNamara, Loren Terveen, and Svetlana Yarosh. 2025. Peer Recommendation Interventions for Health-related Social Support: a Feasibility Assessment. Proc. ACM Hum.-Comput. Interact. 9, 2, Article CSCW146 (April 2025), 59 pages. https://doi.org/10.1145/3711044

A preprint is available on arXiv: http://arxiv.org/abs/2209.04973