Tutor Co-pilot: real-time scaffolding for math tutors

Tutor Co-pilot: a prototype interface to assist math tutors.

I recently implemented a tutor “copilot” in collaboration with the team at Carnegie Mellon working on PLUS. PLUS (Personalized Learning Squared) is training and management software for math tutors.

Tutor Co-pilot is a new feature for PLUS to provide real-time scaffolding for math problems.

According to instructor Rebecca Alber:

“Scaffolding is breaking up the learning into chunks and providing a tool, or structure, with each chunk.”

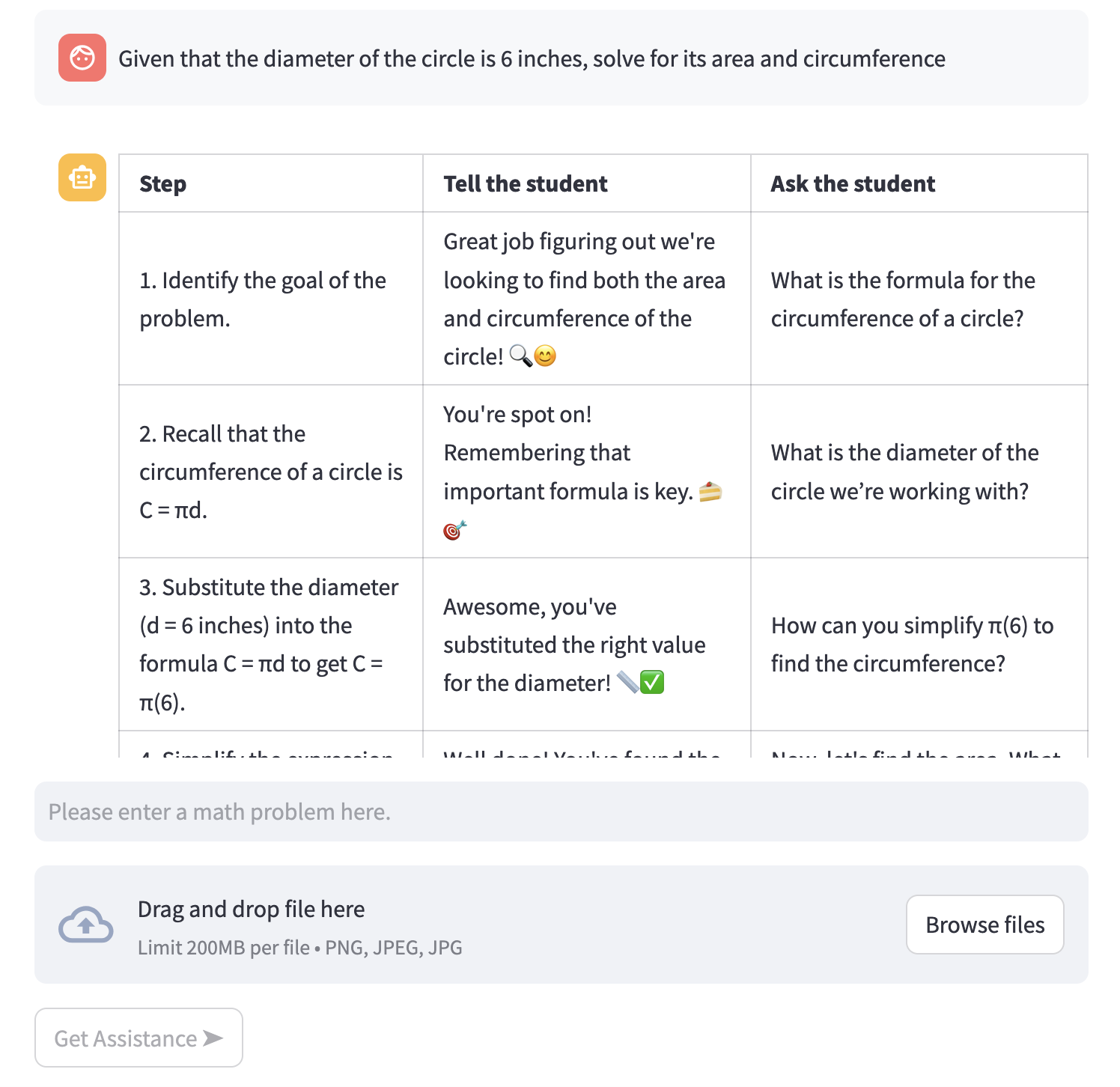

The Tutor Co-pilot works by having tutors copy-paste or upload a screenshot of the problem a student is working on. The Tutor Co-pilot then shows a structured table with three columns:

- Steps: a step-by-step guide on how to solve the problem

- Tell The Student: a bit of positive reinforcement appropriate to that step of the problem

- Ask The Student: an open-ended, strategic question motivating students to actively participate and think independently about the problem-solving goal

A structured table helps tutors quickly scaffold a problem. The table is generated by OpenAI’s GPT-4o LLM.

Tutors choose what they want to use from the table to improve their communication with students about that math problem.
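As a rough illustration of the three-column table described above, here is a minimal Python sketch. It assumes the model is asked to return a JSON array with one object per step; the key names (`step`, `tell_the_student`, `ask_the_student`) and the helpers `parse_scaffold` and `to_markdown` are hypothetical names chosen for this example, not the actual PLUS implementation. No API call is made here; the input is whatever text the model returned.

```python
import json
from dataclasses import dataclass


@dataclass
class ScaffoldRow:
    """One row of the scaffolding table (field names are assumptions)."""
    step: str
    tell_the_student: str
    ask_the_student: str


def parse_scaffold(raw_json: str) -> list[ScaffoldRow]:
    """Parse a JSON array of row objects, skipping rows that lack a column."""
    rows = []
    for item in json.loads(raw_json):
        try:
            rows.append(
                ScaffoldRow(
                    step=item["step"],
                    tell_the_student=item["tell_the_student"],
                    ask_the_student=item["ask_the_student"],
                )
            )
        except (KeyError, TypeError):
            # Malformed row: drop it rather than show a half-empty line.
            continue
    return rows


def to_markdown(rows: list[ScaffoldRow]) -> str:
    """Render the rows as a three-column Markdown table."""
    lines = [
        "| Steps | Tell The Student | Ask The Student |",
        "| --- | --- | --- |",
    ]
    for row in rows:
        lines.append(
            f"| {row.step} | {row.tell_the_student} | {row.ask_the_student} |"
        )
    return "\n".join(lines)
```

Calling `to_markdown(parse_scaffold(model_output))` would give a table the tutor can scan quickly. Dropping malformed rows is one possible design choice; because the tutor picks what to use from the table anyway, a shorter table is arguably less harmful than a confusing one.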

Naturally, we used large language models (LLMs) to generate the table we show to tutors. One advantage of the “Co-pilot” design is that it’s acceptable (but not preferable) to show hallucinations to tutors in a way that it isn’t for students. The tutor always serves as a buffer between the LLM output and the student. For the tutor, Tutor Co-pilot can function as a safety net for moments when they find themselves unsure or stuck.

I worked closely with designers Tina Chen and Zhiyuan Chen on Tutor Co-pilot. Tina has a more detailed write-up about the design process on her website.

One use of LLMs that I did not expect on this project came during the first phase of design. Tina and Zhiyuan used multiple LLMs to generate initial seed ideas for discussion, rather than a process I’ve used before, such as IDEO’s brainstorming process. This idea is really intriguing, although I’m a bit skeptical. “Can LLMs Generate Novel Research Ideas? A Large-Scale Human Study with 100+ NLP Researchers” (2024) explored the use of LLMs for research idea generation and argued that LLMs produced ideas that were more novel but less technically feasible. I’m sure there’s a lot more work going on in this space right now, and I’m curious to see how LLMs will best fit into the early design process.

Edit: Unsurprisingly, the name Tutor Co-pilot was independently in use by a team at Stanford. Their prototype, detailed on arXiv, focuses on text-based tutoring. Congrats to Rose Wang and the rest of the team for their useful design and compelling randomized controlled trial. See also Rose Wang and Megha Srivastava’s blog post “Productive Struggle: The Future of Human Learning in the Age of AI”.